Here is our scoring and ranking methodology. Knowing how we score each category when evaluating headphones helps you understand the scores and ratings.

Last updated: October 2023

The goal is to transparently communicate our behind-the-scenes systematic approach to evaluating headphones. Our methodology is designed to ensure each pair of headphones is assessed fairly and thoroughly. This way, our reviews are reliable and comparable, and you, the reader, know what to expect.

This article serves as a testament to our commitment to providing accurate, data-backed, unbiased, and expert-supported content to you, our reader.

Read more on how we test.

Evaluation Criteria

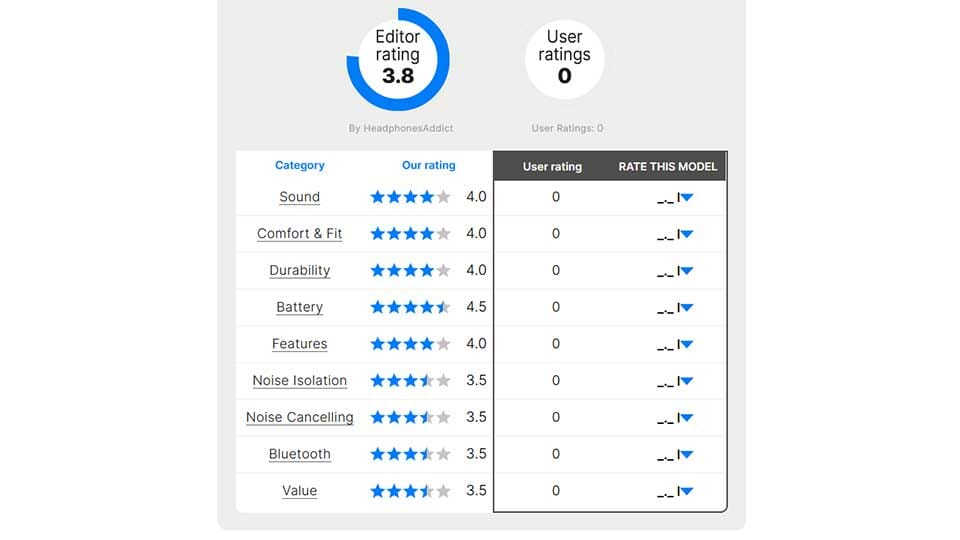

The Sonic9Score: We evaluate each pair of headphones on 9 different key points:

- Audio quality (Sound)

- Comfort & Fit

- Durability

- Battery life

- Features & Mic (App, spatial audio, controls, game mode, microphone quality, etc.)

- Noise Isolation

- Active Noise Cancellation

- Bluetooth Connection (Wireless connection)

- Value (Is it a good value for money?)

We score each category from 1 to 5 (5 being the best).

We omit scoring a category when it isn’t relevant.

For example:

It makes no sense to score Truthear x Crinacle Zero:Red (a wired pair of in-ear headphones) on a “wireless connection”.

Or Apple AirPods 3 (earbuds without ANC), on the effectiveness of “active noise cancellation”.

Doing this would skew the final scoring and rank. By omitting irrelevant categories, the scores are comparable.
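
To illustrate why leaving out irrelevant categories keeps scores comparable, here is a minimal Python sketch. It assumes the overall score is a plain average of the scored categories, which this article does not state. The function name `overall_score` and the sample numbers are hypothetical.

```python
from typing import Optional


def overall_score(category_scores: dict[str, Optional[float]]) -> float:
    """Average only the categories that were actually scored.

    Assumption: the overall score is a simple mean. A category set to
    None is treated as "not relevant" and is left out entirely.
    """
    scored = [s for s in category_scores.values() if s is not None]
    if not scored:
        raise ValueError("at least one category must be scored")
    return round(sum(scored) / len(scored), 2)


# Hypothetical scores for a wired pair of in-ear headphones.
wired_in_ears = {
    "sound": 4.5,
    "comfort_fit": 4.0,
    "durability": 4.0,
    "bluetooth": None,                  # wired: nothing to score
    "active_noise_cancellation": None,  # no ANC: nothing to score
    "value": 4.5,
}

print(overall_score(wired_in_ears))  # 4.25
```

If the two missing categories were counted as zeros instead, the same pair would average 17 / 6, or about 2.83, which is the kind of skew we want to avoid.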

Evaluating sound quality

We test by combining objective measurements and subjective listening by the tester in 5 different sub-categories:

- Bass

- Mid range

- Treble

- Soundstage

- Imaging

Learn about the sound testing details here.

The way we score is to deduct points from a perfect score of 5.0.

We deduct points for each imperfection in the sound, depending on its impact:

- 0.5 points for smaller imperfections.

- 1.0 or 1.5 for bigger flaws.

For example:

If the sound follows the Harman curve in the reproduction of bass, mids, and treble and has good imaging but a tiny soundstage, we deduct one point. The end score for audio quality would be 4.0.
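
The arithmetic behind this example can be sketched in a few lines of Python. This is only an illustration: the function name `score_after_deductions` and the flaw labels are made up for this sketch and are not part of our tooling.

```python
def score_after_deductions(deductions: list[float], start: float = 5.0) -> float:
    """Subtract every deduction from a perfect starting score."""
    return start - sum(deductions)


# The example above: a tiny soundstage counts as a bigger flaw (1.0 point).
sound_flaws = {"tiny soundstage": 1.0}
print(score_after_deductions(list(sound_flaws.values())))  # 4.0

# Two smaller imperfections (0.5 each) lead to the same end score.
print(score_after_deductions([0.5, 0.5]))  # 4.0
```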

Evaluating comfort & fit

These are the questions we ask:

- How do the headphones feel on the head?

- How comfortable do the headphones feel over time?

- How stable are the headphones during movement?

Headphones with a 5.0 score feel comfortable from the start, stay comfortable over time, and stay on your head without problems.

We especially focus on fit stability for sports-oriented headphones, as that is the main criterion for quality workout headphones.

For example:

Because the Sony WH-1000XM5 headphones have thin padding and create heat (and sweat) over time, they lost 0.5 points for each fault. The end score is 4.0 for the “Comfort & Fit” category.

Evaluating durability

The durability score depends on the quality of materials, water protection, and design.

Cheap materials are easy to spot. We’ve personally ruined many headphones, so we know what to look for.

The most common reasons for deducted points are cheap plastics and flimsy construction.

Holding the headphones and feeling the quality of their materials and construction gives us a good idea of how well they’re made. Testing hundreds of pairs helps with that.

For example:

TOZO NC7 earbuds have a 4.0 “durability” score.

- They lost 0.5 points for having lower water protection than alternatives (IPX6 while many similar earbuds are IPX7+)

- And they lost 0.5 points for a charging case that proved flimsy in testing (the lid is poorly attached).

The only challenge is that there is no guarantee each pair of headphones is equally durable. Some pairs come faulty out of the factory. And we have no way of knowing how many. Thankfully, all new headphones come with a guarantee for these cases. You can always replace them with a new working pair.

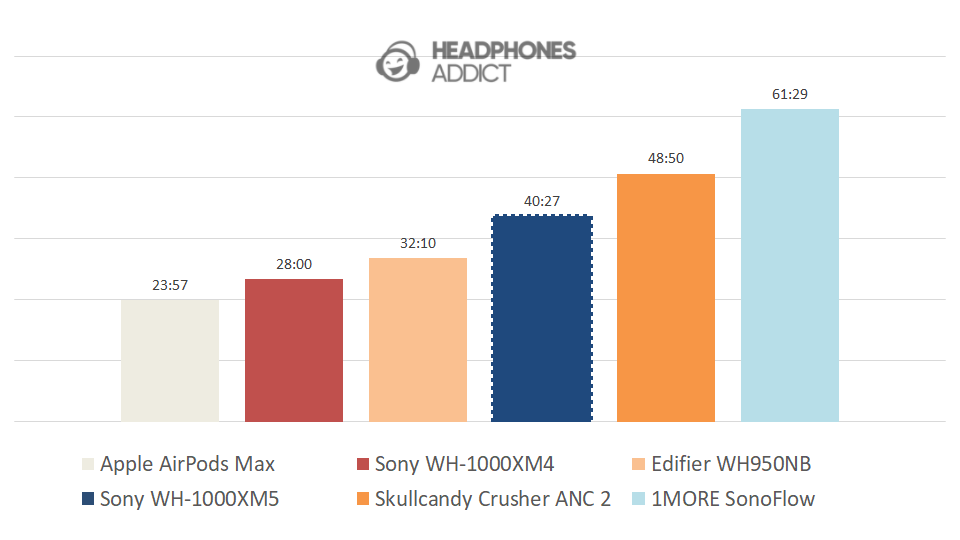

Evaluating battery life

The battery life score depends on how long the battery lasts from 100% to shutdown, how fast the headphones charge, and how easily they charge (charger quality).

We compare real battery life with what the manufacturer promises and with the battery lives of headphones in the same category.

For example:

We compare Sony WH-1000XM5 with other premium, wireless, over-ear headphones.

And because they lasted over 40 hours (more than promised and close to the longest-lasting headphones) and are capable of quick charging, they got a 5.0 score.

Apple AirPods Max, which cost more, last only about half as long. That’s why they have the “almost great” score of 3.5 (20h+ is still good).

Evaluating microphone quality

Microphone quality score depends on the clarity of voice during a call in quiet and noisy environments.

A perfect score requires perfect audibility in both quiet and noisy places.

We deduct points according to how much audibility worsens:

- Great but not perfect audibility: -0.5 points

- Good audibility: -1 point

- Between good and hard audibility: -1.5 points

- Hardly audible: -2.0 points

- Not audible: -2.5 points

In effect, if the tester’s voice is inaudible in both the quiet and the noisy environment, the microphone gets a score of 0.
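
As a rough illustration, the deduction table above can be written as a lookup in Python. The sketch assumes each environment (quiet and noisy) is rated separately and that both deductions are subtracted from 5.0, which is what the score of 0 for a fully inaudible mic implies. The names `MIC_DEDUCTIONS` and `mic_score` and the level labels are hypothetical.

```python
# Deduction per audibility level, copied from the list above.
MIC_DEDUCTIONS = {
    "perfect": 0.0,
    "great": 0.5,
    "good": 1.0,
    "between good and hard": 1.5,
    "hardly audible": 2.0,
    "not audible": 2.5,
}


def mic_score(quiet: str, noisy: str) -> float:
    """Score a microphone from its audibility in the two test environments.

    Assumption: the quiet and noisy tests are rated separately, and
    both deductions are subtracted from a perfect 5.0.
    """
    return 5.0 - MIC_DEDUCTIONS[quiet] - MIC_DEDUCTIONS[noisy]


print(mic_score("perfect", "perfect"))          # 5.0
print(mic_score("great", "good"))               # 3.5
print(mic_score("not audible", "not audible"))  # 0.0
```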

Here’s an example microphone test (no-noise test until 0:34, background-noise test from 0:34 to 0:58):

This mic is clear and audible in all situations: Sony WH-1000XM5

Evaluating features (App, spatial audio, controls, game mode, etc.)

Features score depends on the number and quality of extra features, including app features.

When scoring and ranking features, we ask:

- How easy is it to use the built-in controls?

- Do the features work as described, or are they just a gimmick?

- How useful is each feature?

- How do the features compare?

Scoring and ranking features greatly depends on the category and price of the headphones.

- We expect more and better features from expensive headphones.

- We expect more features from wireless Bluetooth headphones (and fewer from wired).

- Each pair is compared with others in its own category.

It wouldn’t be fair to compare the features of studio headphones to premium ANC Bluetooth earbuds like Sony’s XM series.

We don’t expect many features in a pair of studio headphones because you don’t need them for studio use. Each category of headphones is assessed based on intended use.

Evaluating noise isolation

Noise isolation score depends on how much and what frequencies are blocked during measuring.

This is compared to the best noise-isolating headphones in the category. We compare in-ear with in-ear, over-ear with over-ear, etc.

Headphones that are not made to isolate noise, like bone-conduction headphones, are not scored.

Evaluating active noise cancellation

Active noise cancelling score depends on how much ambient noise ANC removes during measuring.

The same logic as with passive noise isolation applies.

The better ANC is at cancelling noise compared to the best performers in its category, the higher the score.

Keep in mind that ANC performance keeps improving. For this reason, high ANC scores of older headphones are not comparable with those of the latest models. Newer headphones are scored against a higher standard, so the same score on a newer pair means better ANC.

Evaluating Bluetooth connection (Wireless connection)

Scoring wireless connection is based on the range, stability and ease of connection.

While most Bluetooth headphones have similar wireless performance, some stand out (in a positive or negative way).

The best performers are used as the benchmarks for the perfect score.

So a high “Bluetooth score” means the headphones have a long connection range, stay stable during use without hiccups or distortion, and are easy to pair, with multipoint support.

Any deviation from the expected performance deducts points. How many points depends on the severity of the connection issues.

For example:

Skullcandy Crusher ANC 2 headphones have a 5.0 score thanks to their long range of 65ft (20m) indoors, stable connection, and multipoint support. This makes them one of the best performers in this category.

Evaluating value

Value score depends on the comparison between what YOU GET and PRICE.

The reviewer assesses all tested categories and looks at the headphones as one package to determine if they’re worth the price.

Scoring scale for “Value”:

- Exceptional value: 5.0 – The headphones’ quality significantly exceeds their price.

- Almost exceptional: 4.5 – The headphones offer everything and more than what you’d expect for the price.

- Great value: 4.0 – The headphones offer more than most alternatives in the same price range.

- Almost great: 3.5 – The headphones offer more than expected but not enough for a great score.

- Good: 3.0 – The headphones offer the expected for their price.

- Average: 2.5 – The headphones offer enough to justify their price.

- Below Average: 2.0 – The headphones should have a lower price.

- Bad: 1.5 – The headphones are too expensive for what they offer.

- Avoid at all costs: 1.0 – It’s in the name.
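
For readers who want to work with our scores programmatically, the scale above maps cleanly to a lookup table. This is a minimal Python sketch; `VALUE_SCALE` and `value_label` are hypothetical names.

```python
# Value score -> label, copied from the scale above.
VALUE_SCALE = {
    5.0: "Exceptional value",
    4.5: "Almost exceptional",
    4.0: "Great value",
    3.5: "Almost great",
    3.0: "Good",
    2.5: "Average",
    2.0: "Below Average",
    1.5: "Bad",
    1.0: "Avoid at all costs",
}


def value_label(score: float) -> str:
    """Return the label for a Value score; only half-point steps exist."""
    if score not in VALUE_SCALE:
        raise ValueError(f"{score} is not a step on the Value scale")
    return VALUE_SCALE[score]


print(value_label(3.5))  # Almost great
```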

The Value score is a subjective assessment of value, according to the reviewer. It gives a general idea of how good the headphones are compared to alternatives on the market.

How We Select The Headphones

The main criterion for choosing which headphones we review is popularity, for an obvious reason: we want to test and review headphones that people are interested in.

There are two ways we get headphones:

- The main way is buying them ourselves with our own money.

- Secondly, some brands send us free samples to review.

Buying the headphones ourselves gives us the same experience as a regular buyer (and it gets quite expensive). But it’s the best way. There is no hand-picking of products.

We usually order them online (Amazon is our friend) or buy them at local electronics shops.

And when we get free samples, we always let the sender know that they’re doing that at their own risk. We don’t hold back if their headphones suck.

Testing Environment

Our testing environment depends on what we’re trying to test. But the main goal is to be as close to everyday scenarios as possible.

What good is testing headphones in a studio and finding out how they perform if people won’t use them there?

We believe in learning how they perform in real-life situations, and that’s how we test them: outdoors, in an office, in the streets.

The only time we want studio conditions is when measuring audio performance (using a soundproof room). We don’t want to color the measurement results with ambient noise.

You can read more about our testing environment in the “How we test” article.